We’ve just released the Marks & Clerk AI Report 2025, highlighting a massive surge in patent activity around Quantum AI (QAI). At the same time, a new review led by NVIDIA explores how classical AI is accelerating quantum computing development. When people talk about quantum computing (QC) and artificial intelligence (AI), the conversation often drifts toward a sci-fi future where quantum computers turbocharge AI models. But the NVIDIA-led review suggests the reality is far more immediate and far more practical.

Instead of quantum computers powering AI, AI is helping to build quantum computers.

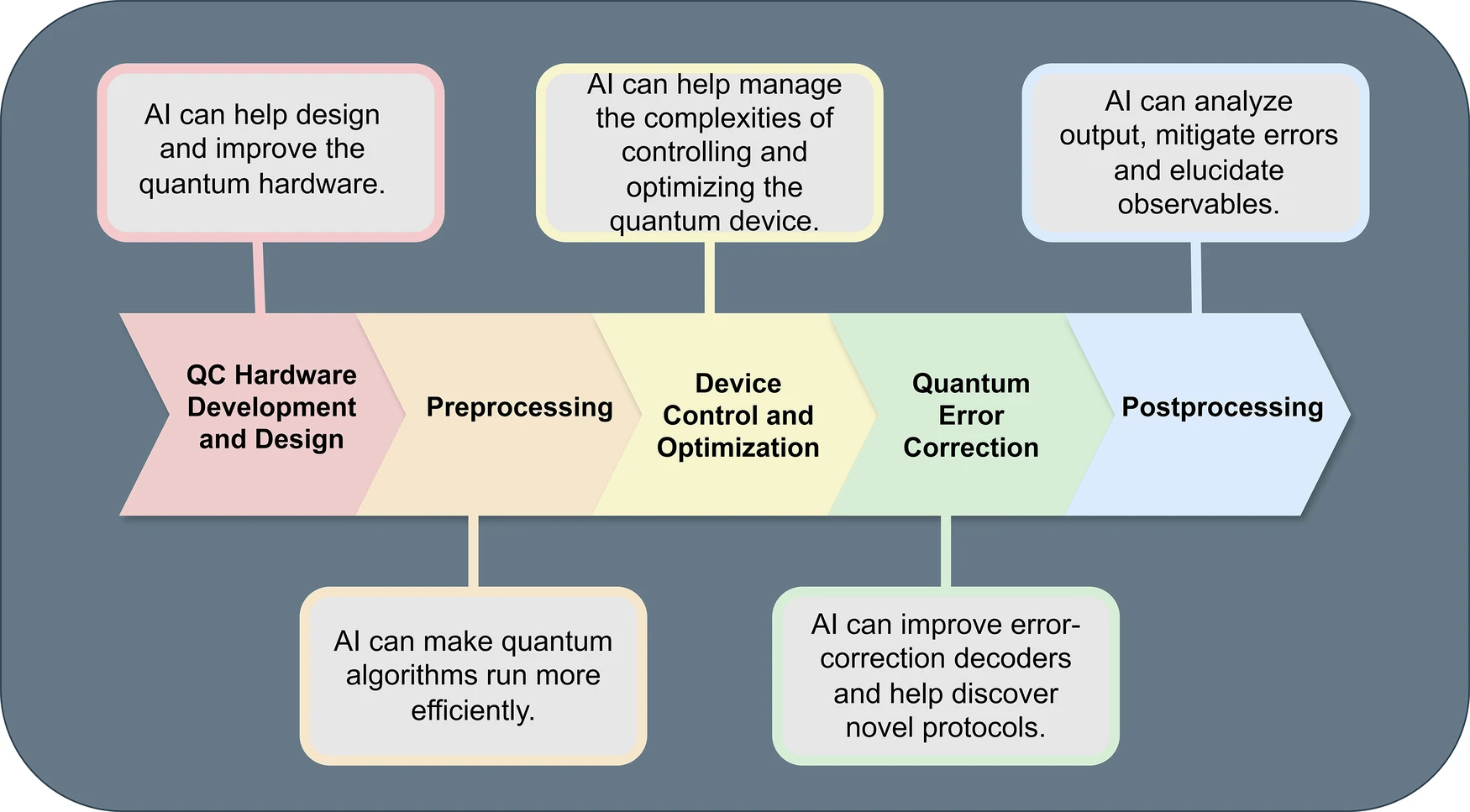

Quantum hardware is notoriously difficult to design and operate. Traditional engineering approaches struggle with this complexity, but AI techniques such as reinforcement learning and generative models are proving highly effective at designing optimal qubit layouts and circuit geometries, calibrating and controlling quantum devices, and managing real-time error correction.

This intense focus on hardware engineering resonates with themes identified in our recently released Marks & Clerk AI Report 2025. Our report highlighted the massive surge in Quantum AI patent filings, while noting the ongoing challenge applicants face in demonstrating sufficient "technical character" to patent examiners. The hardware developments detailed in the review article provide concrete examples of the highly technical innovation occurring at this intersection.

Looking ahead, the researchers predict the formation of a symbiotic ‘feedback loop’. They argue that classical AI supercomputers are necessary right now to build fault-tolerant quantum processors, and those quantum processors will eventually be needed to run the energy-intensive AI models of the future. For innovators, this is a moment to watch closely. The convergence of these two fields is creating patentable technologies today, and laying the groundwork for tomorrow’s breakthroughs.

Read the full analysis and see the data behind the trends in our AI Report here.

Many of QC's biggest scaling challenges may ultimately rest on developments in AI
